In my previous blog post, Saving input and output with sp_execute_external_script, I was exploring the possibilities how to capture the R code that external procedure sends it internally to the Launchpad.exe program. And a blog comment by reader Bob gave me additional push to write the second part to same topic. Thank you Bob for sharing this with us.

Bob was explained that how they are doing, and you can read all about it here. To recap, R code (as well as any additional packages) is stored on local file system, it gets copied, zipped, transferred and inserted to SQL table. Calling sp_execute_external_script everything gets unzipped and executed.

I will not comment on the solution Bob provided, since I don’t know how their infrastructure, roles, security is set up. At this point, I am grateful for his comment. But what I will comment, is that there is no straightforward way or any out-of-the-box solution. Furthermore, if your R code requires any additional packages, storing the packages with your R code is not that bad idea, regardless of traffic or disk overhead. And versioning the R code is something that is for sure needed.

To continue from previous post, getting or capturing R code, once it gets to Launchpad, is tricky. So storing R code it in a database table or on file system seems a better idea.

Starting with original sample R code:

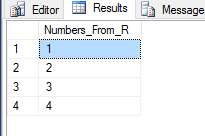

EXEC sys.sp_execute_external_script @language = N'R' ,@script = N' d <- InputDataSet c <- data.frame(Num_V1 = c(1,2,3)) c OutputDataSet <- c' ,@input_data_1 = N'SELECT 1 AS Nmbrs_From_R' WITH RESULT SETS ((Numbers_From_R INT));

We can create SQL table for R code to be persistent and always available.

CREATE TABLE R_code (id INT ,R NVARCHAR(MAX)) INSERT INTO R_code SELECT 1, ' d <- InputDataSet c <- data.frame(Num_V1 = c(1,2,3)) c OutputDataSet <- c' -- (1 row(s) affected) DECLARE @r_code NVARCHAR(MAX) SELECT @r_code = R FROM R_code WHERE id = 1 EXEC sys.sp_execute_external_script @language = N'R' ,@script = @r_code ,@input_data_1 = N'SELECT 1 AS Nmbrs_From_R' WITH RESULT SETS ((Numbers_From_R INT));

This will yield same results. To have R code and T-SQL code side by side, I would suggest to store T-SQL in table as well.

DROP TABLE IF EXISTS R_code CREATE TABLE R_code (id INT ,R NVARCHAR(MAX) ,SQLC NVARCHAR(MAX)) INSERT INTO R_code SELECT 1, ' d <- InputDataSet c <- data.frame(Num_V1 = c(1,2,3)) c OutputDataSet <- c','SELECT 1 AS Nmbrs_From_R' -- (1 row(s) affected) DECLARE @r_code NVARCHAR(MAX) DECLARE @sql_code NVARCHAR(MAX) SELECT @r_code = R FROM R_code WHERE id = 1 SELECT @sql_code = SQLC FROM R_code WHERE id = 1 EXEC sys.sp_execute_external_script @language = N'R' ,@script = @r_code ,@input_data_1 = @sql_code WITH RESULT SETS ((Numbers_From_R INT));

Now, the best thing to do, is to add some logging to the R_code table and some versioning. Easiest way to achieve this by using Temporal Table.

TEMPORAL TABLE

Rewrite the original table:

CREATE TABLE R_code ( id INT IDENTITY(1,1) ,CombinationID INT NOT NULL CONSTRAINT PK_ComboID PRIMARY KEY ,R NVARCHAR(MAX) ,SQLC NVARCHAR(MAX) ,Valid_From DATETIME2 GENERATED ALWAYS AS ROW START NOT NULL ,Valid_To DATETIME2 GENERATED ALWAYS AS ROW END NOT NULL ,PERIOD FOR SYSTEM_TIME (Valid_From, Valid_To) ) WITH (SYSTEM_VERSIONING = ON);

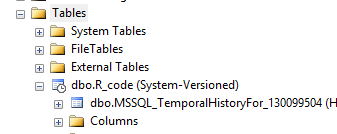

Please note, that table will be represented slightly differently (see the clock in the icon).

Besides actual table (or Temporal Table), system automatically creates history table where all the changes are being kept.

Once this is done, I can store T-SQL and R-code.

INSERT INTO R_code (CombinationID, R, SQLC) SELECT 1,' d <- InputDataSet c <- data.frame(Num_V1 = c(1,2,3)) c OutputDataSet <- c','SELECT 1 AS Nmbrs_From_R' -- (1 row(s) affected)

Now I can run the query same way as before:

DECLARE @r_code NVARCHAR(MAX) DECLARE @sql_code NVARCHAR(MAX) SELECT @r_code = R FROM R_code WHERE CombinationID = 1 SELECT @sql_code = SQLC FROM R_code WHERE CombinationID = 1 EXEC sys.sp_execute_external_script @language = N'R' ,@script = @r_code ,@input_data_1 = @sql_code WITH RESULT SETS ((Numbers_From_R INT));

Suppose that there are changes either to R code or SQL Code committed, and I will simulate this change with an UPDATE query:

-- INSERT ANOTHER CombinationID = 1, with changed R Code UPDATE R_code SET R = ' d <- InputDataSet c <- data.frame(Num_V1 = c(1,2,3,4)) c d OutputDataSet <- c' ,SQLC = 'SELECT 1 AS Nmbrs_From_R' WHERE CombinationID = 1 -- (1 row(s) affected)

The best part is that system maintains the versioning automatically and I – as an end user – don’t need to worry about changing the original T-SQL code that executes R Script. So once again I can execute the same query:

DECLARE @r_code NVARCHAR(MAX) DECLARE @sql_code NVARCHAR(MAX) SELECT @r_code = R FROM R_code WHERE CombinationID = 1 SELECT @sql_code = SQLC FROM R_code WHERE CombinationID = 1 EXEC sys.sp_execute_external_script @language = N'R' ,@script = @r_code ,@input_data_1 = @sql_code WITH RESULT SETS ((Numbers_From_R INT));

And now, I will get the results from updated R-script or T-SQL code.

FILE TABLE

Another way (among many) is to use File Table. In one of my previous blog posts, I covered how to create and configure File Table. In this scenario, we will consider following. Create a file, that will contain R code and store it with *.R extension. And upload it to the directory, where File Table is considering this file-stream data to be landed or stored.

Quickly check the configuration:

--- Check configurations SELECT DB_NAME(database_id) AS DbName ,non_transacted_access ,non_transacted_access_desc ,directory_name ,* FROM sys.database_filestream_options WHERE DB_NAME(database_id) = db_name() --'FileTableRChart'

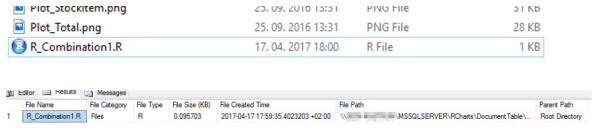

By checking where my R file is residing:

SELECT FT.Name AS [File Name] ,IIF(FT.is_directory=1,'Directory','Files') AS [File Category] ,FT.file_type AS [File Type] ,(FT.cached_file_size)/1024.0 AS [File Size (KB)] ,FT.creation_time AS [File Created Time] ,FT.file_stream.GetFileNamespacePath(1,0) AS [File Path] ,ISNULL(PT.file_stream.GetFileNamespacePath(1,0),'Root Directory') AS [Parent Path] FROM [dbo].[ChartsR] AS FT LEFT JOIN [dbo].[ChartsR] AS PT ON FT.path_locator.GetAncestor(1) = PT.path_locator WHERE FT.File_type = 'R'

And you can see that I have created R file with the name R_combination1.R

So we can access this using OPENROWSET.

SELECT * FROM OPENROWSET(BULK N'\\****\RCharts\DocumentTable\R_Combination1.R', SINGLE_CLOB) AS R_Code

You can also do this using the master.dbo.xp_cmdshell, whatever suits you better.

Once you have code read from R file, you can simply continue to execute external procedure.

-- Physical Location of FileTable DECLARE @r_code NVARCHAR(MAX) SELECT @r_code = BulkColumn FROM OPENROWSET(BULK N'C:\DataTK\00\R_Combination1.R', SINGLE_CLOB) AS R_Code EXEC sys.sp_execute_external_script @language = N'R' ,@script = @r_code ,@input_data_1 = N'SELECT 1 AS Nmbrs_From_R' WITH RESULT SETS ((Numbers_From_R INT));

And result is the same. In this case you need to consider the usage of BCP, BulkCopy or XP_CMDSHELL. Again, based on your eco-system, what suits you best.

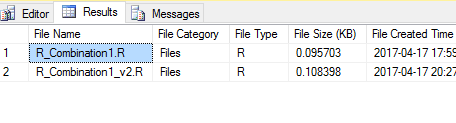

With file table versioning can be a bit of work-around. In addition, you should implement some naming convention to store files correctly or add additional logic to storing and keeping the changes.

So in my case, I am keeping versioning on FileName level, which can be done using T-SQL or renaming the file on file system and later creating hierarchies with files.

As always, Code is available at GitHub.

Happy coding!

[…] article was first published on R – TomazTsql, and kindly contributed to […]

LikeLike

[…] Tomaz Kastrun shares an unorthodox way of collecting historical R code changes: […]

LikeLike

Great write up!

I do have a small question on the coding approach. I’m not too versed on the execute external sproc, but it seems to me that using the input_data_1 parm has no effect. Am I mistaken or is there a larger picture that I’m missing?

Thanks again for the article.

LikeLike

Hi Tomaz,

Great article as I hook into filetable technologies quite a lot. I’m trying to set the working directory using the UNC of a filetable path but R just outputs an error when using sp_execute_external_script.

The working directory is \\SERVER1\Projects\FileTable\BlueGrass

It works fine when called from R Studio.

The error in SSMS is:

Msg 39004, Level 16, State 20, Line 0

A ‘R’ script error occurred during execution of ‘sp_execute_external_script’ with HRESULT 0x80004004.

Msg 39019, Level 16, State 2, Line 0

An external script error occurred:

Error in setwd(folder) : cannot change working directory

Calls: source -> withVisible -> eval -> eval -> setwd

Error in execution. Check the output for more information.

Error in eval(expr, envir, enclos) :

Error in execution. Check the output for more information.

Calls: source -> withVisible -> eval -> eval -> .Call

Execution halted

Can R in SQL Server 2017 work with files hosted on FileTable?

Could it be a permissions error with Filetable but then I’ve granted the user account sysadmin?

Thanks,

Ritesh

LikeLike